Safely Apply AI in Engineering

I am Dr. He Wen, PI of the AI Safety Lab at Indiana State University.

My former supervisors/collaborators are:

Dr. Faisal Khan, Director of the Mary Kay O’Connor Process Safety Center at Texas A&M University (PhD)

and Dr. Simaan AbouRizk, Dean of Engineering at the University of Alberta (postdoc).

Much of my research centers on the concept of conflict, particularly within emerging AI systems. I may be among the first to formally propose and mathematically model the notion of human–AI conflict, a topic that extends from ergonomics, human factors, and human-machine interaction.

At a deeper level, my core research focus is safety: its fundamental principles, theoretical frameworks, and practical applications. I am especially interested in probabilistic risk assessment, with a specialization in subjective probability estimation.

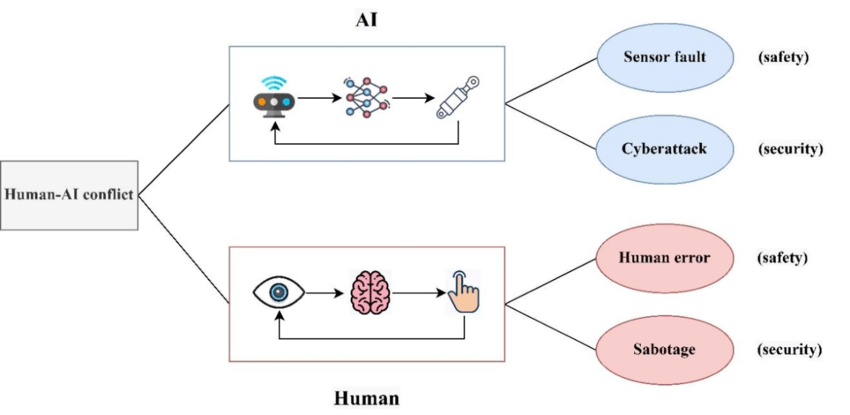

Human-AI Collaboration and Conflict

Earlier research treated human error and machine failure as separate phenomena. Current work focuses instead on human-machine collaborative systems in industrial contexts. An emerging concern in such systems is conflict, which differs from traditional issues such as fault, failure, or malfunction due to its absence of direct human involvement. Previously described as automation confusion or automation surprise, this concept has been redefined in our work as human-machine conflict, with a precise academic definition and an accompanying mathematical model. Our research is now moving from conventional automated machinery to artificial intelligence (AI).

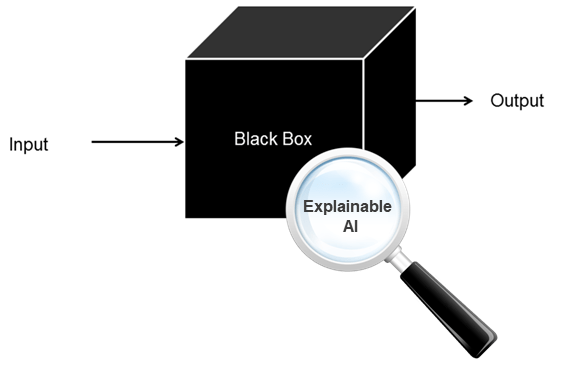

Explainable AI in engineering practice

The opacity of AI is a significant obstacle to its adoption in industrial settings, where reliability is paramount. Addressing it requires moving beyond judging AI models solely by their outputs and toward explaining their inner workings. Techniques such as Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), among other algorithmic approaches, help deconstruct and understand AI models, improving transparency and accountability. By embracing explainable AI (XAI), organizations can bridge the gap between the complexity of AI systems and the need for trust in industrial applications, fostering confidence among users and stakeholders.
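To make the idea behind Shapley-based explanations concrete, the following is a minimal sketch that computes exact Shapley values for a tiny model by enumerating feature subsets. It is an illustration only and does not use the SHAP library. The function `toy_risk_model`, its three inputs, and the all-zero baseline are hypothetical, and replacing "absent" features with baseline values is an assumed simplification.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.

    Assumption: a feature that is "absent" from a coalition is set to its
    baseline value. Cost grows as 2^n, so this only suits very small n.
    """
    n = len(x)

    def value(subset):
        # Features in `subset` take their actual value; the rest stay at baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = set(coalition)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_risk_model(z):
    # Hypothetical risk score with an interaction between pressure and valve state.
    pressure, temperature, valve_open = z
    return 0.5 * pressure + 0.2 * temperature + 0.3 * pressure * valve_open


x = [2.0, 1.0, 1.0]          # hypothetical operating point
baseline = [0.0, 0.0, 0.0]   # hypothetical reference point
phi = shapley_values(toy_risk_model, x, baseline)

for name, contribution in zip(["pressure", "temperature", "valve_open"], phi):
    print(f"{name}: {contribution:.3f}")
```

The attributions sum to the difference between the model output at `x` and at the baseline, and the interaction term is split evenly between the two features involved. Practical tools approximate this computation because exact enumeration is infeasible for models with many features.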

Accident causality reasoning

In safety disciplines, accident models and the study of accident causation have traditionally been fundamental pillars. Even so, human understanding of causation remains incomplete. The emergence of causal inference and causal reinforcement learning raises the possibility that machine reasoning could surpass human reasoning. It is therefore critical to compare human and machine reasoning mechanisms in greater depth, paying close attention to their differences in order to preempt novel risks. This is particularly pertinent in scenarios where AI significantly outstrips human intellect, underlining the importance of maintaining human control over advanced AI systems.

Safe AI design in industrial systems

Enterprise-level generative AI is currently being developed and deployed in management processes and even in industrial systems, which may create new problems. Especially when combined with embodied intelligence, the new generation of robots may exhibit unpredictable reasoning and behavior. Safe AI design should therefore be approached cautiously and taken seriously.

Emergency response to abnormal AI

Frequent portrayals of robot awakenings in movies and television suggest that this scenario may not be distant. When such conflicts arise, how to respond immediately becomes a new question. We are currently formulating specific guidelines for AI to follow, distinct from conventional natural-language principles such as the Three Laws of Robotics.

Risk simulation and mitigation

Despite significant research effort directed at risk simulation, real-time dynamic risk simulation remains elusive. The current emphasis is on combining existing knowledge graphs, causal reasoning, and deep learning to create advanced commercial risk-monitoring software. Development in this area is urgently required.